Microsoft won the enterprise AI adoption battle before most organizations realized they were in one. By making Copilot free and enabling it by default across millions of business accounts, it turned AI from a strategic choice into an operating condition. For leaders who postponed governance and security decisions, that shift is now exposing structural gaps in access control, data protection, and oversight that can no longer be deferred or disguised.

We spoke with Steve Harris, founder of AI4Enterprise, a GenAI consultancy that helps organizations move from AI curiosity to operational value. With more than three decades in IT leadership, including interim VP and CIO roles at BC Ferries, Harris brings a systems-level perspective to AI strategy. He believes this unavoidable adoption introduces a new and more permanent form of vendor dependency.

"Microsoft has basically just won the war. Everybody's unstructured data is already in SharePoint, OneDrive, and Microsoft applications. There's this gravity around that data that pulls people toward using Copilot anyway," Harris says. That gravitational pull is exactly what makes the current moment consequential. Microsoft promises data encryption and commits to not using enterprise data to train its models. But those assurances have created a dangerous assumption: that the platform itself handles the governance problem.

Governance debt exposed: "Microsoft solved that platform trust problem," Harris explains. "But what that's done is create a situation where we used to have security through obscurity. If I wanted to search for the salaries of directors, years ago that might have been buried on page 10 of some search result. But now, because Copilot understands the context, you get the answer straight away."

Policy without teeth: Addressing the gap requires more than documentation. "You really can't just rely on having a piece of paper and some training," Harris says. "You've got to actually implement it in technology to stop people from getting access to data they shouldn't have had access to."

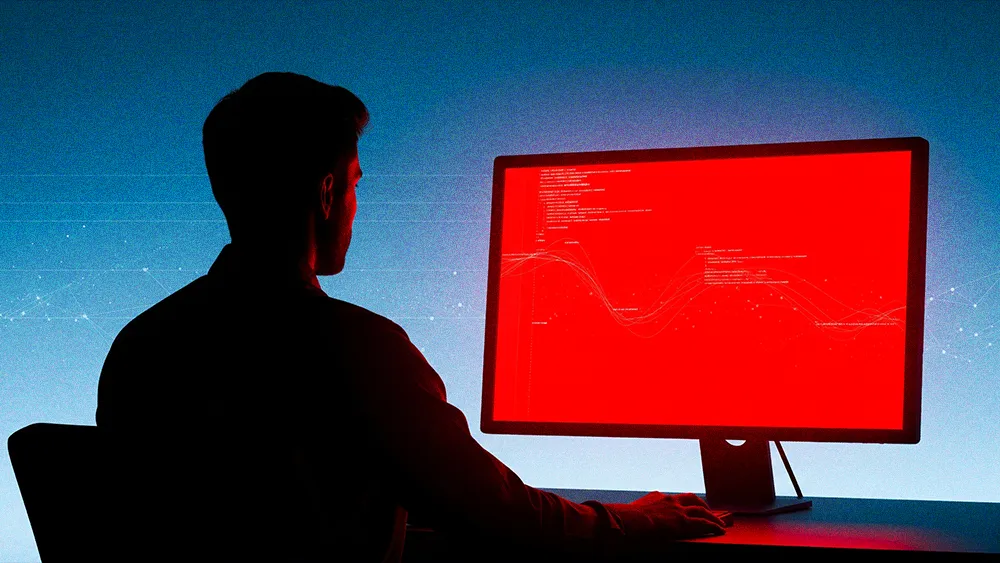

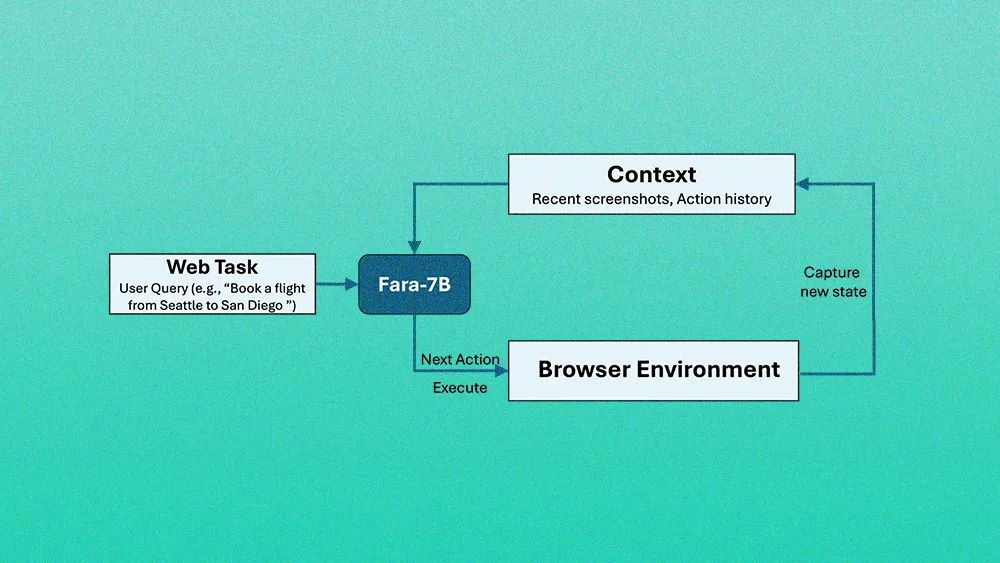

Agentic risk acceleration: The security implications extend beyond Copilot. A new generation of agentic tools, including Perplexity Comet and Opera's Neon browser agent, widens the exposure further. "Those tools now have access to files and systems inside organizations, and the attack surface has changed," Harris says. "We could actually end up seeing more of these systems being exploited just because of the way these tools work."

For leaders attempting to navigate the shift, the operational challenge is compounded by a longer-term strategic concern. As AI moves from simple augmentation to full process automation, the nature of platform dependency changes entirely.

From software to labor: "We're moving from software lock-in to labor lock-in," Harris says. "Once you start automating business processes and building digital workers on top of a single platform, the cost of switching doesn't just go up. It starts to feel infinite." The path forward, he notes, is not to resist adoption but to approach it with clarity. Organizations with strong foundations in risk frameworks will find this transition manageable; those without will find their weaknesses exposed at scale.

Risk versus friction: Harris recommends thinking carefully about where AI fits into existing processes. "If you look at a high-risk process, let's say you're responding to RFPs, you're committing the organization to do something. So that needs to have a degree of friction in it. There are some lower-risk activities like matching purchase orders to invoices. That can be much lower risk than committing an organization to a course of action. You need to think about risk versus friction when you're automating these processes."

Cost uncertainty ahead: The final concern is economic. As Microsoft transitions from seat-based to consumption-based pricing models, organizations face a future where AI costs are difficult to predict and even harder to control. "Once you get locked in, you're locked in from a cost perspective as well, not just a technology perspective," Harris cautions. "If we're going down a consumption-based model and we're locked in, the control of those costs becomes a bit of an issue."

Good management, good governance, and a clear understanding of where AI belongs in high-risk versus low-risk workflows remain the difference between durable value and compounding dependency. "It's not really anything new," Harris concludes. "But realizing the changes that these tools can bring, that's what makes you successful."