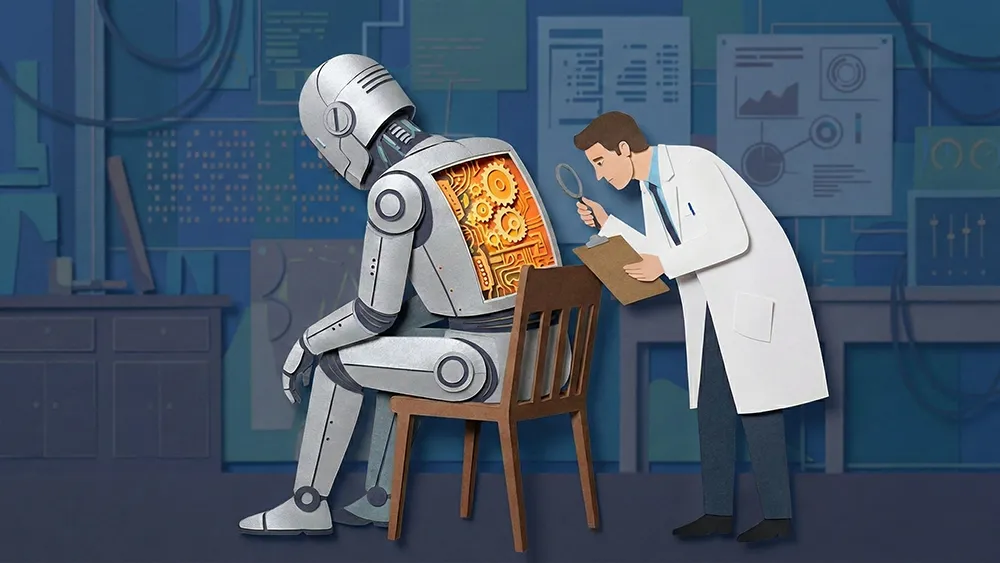

Enterprise AI security risk isn't a model problem or a vendor problem. It's a framework maturity problem. The widespread adoption of AI is exposing weaknesses that already existed, not creating entirely new ones. Tools like Copilot, Gemini, and Claude ship with meaningful security controls, but they can surface risk when organizations lack foundational cybersecurity capabilities, effectively turning AI into a stress test for existing data governance.

Deep Mendiratta is a Fellow Chartered Accountant and risk and finance professional who serves as a Special Invitee to the Committee for Artificial Intelligence at The Institute of Chartered Accountants of India, where his work centers on integrating AI into finance and governance. Over a career of more than 18 years across industry sectors in India, including at leading corporations and large public accounting firms, he has advised organizations navigating complex risk, compliance, and governance challenges. Mendiratta contends that the conversation around AI risk is misframed and that governance maturity, not technology novelty, is the real determinant of enterprise exposure.

"A lot of security features are already inbuilt in these tools, but because the governance framework at particular organizations may still be nascent, you don't know what the repercussions will be if something leaks out of an organization," says Mendiratta. For him, the pressure on corporate governance is growing due to the sheer speed and scale of consumer AI adoption. Partnerships in markets like India, where telecom providers offer free subscriptions to tools like Perplexity and Gemini, are making powerful AI a default part of daily life.

Hallucination hangover: This mass adoption puts innovation on a collision course with the deliberate pace of enterprise control. Even some of the world's largest consulting firms are navigating the tension, making billion-dollar investments in an AI-first approach while discovering its pitfalls in public. "These investments also carry risks," explains Mendiratta. "We have already seen the consequences. For instance, Deloitte faced a penalty in Australia for a report generated by AI. Those kinds of challenges are real and are not going away."

Regulation lag: Even as AI adoption accelerates, the legal framework meant to govern its use is still catching up. "The Digital Data Privacy Protection Act is an inspiration from the global GDPR, but the law is still in the initial phases of implementation in India," Mendiratta notes. Until those rules mature into enforceable standards, organizations are left navigating powerful AI tools without clear, settled guardrails.

Even a so-called enterprise solution is often only as safe as the organization’s underlying security posture. Without a mature risk management framework, the danger extends beyond compliance issues: companies may not even know what has been compromised. The novelty of these AI-driven threats can also be deceptive, as they often exploit the oldest vulnerability of all.

Known unknowns: Blind spots compound when both frameworks and people fall short. "An organization’s blind spots are directly tied to the maturity of its cybersecurity framework," Mendiratta says, and without foundational controls like Data Leakage Prevention, "the organization might not even know what was leaked, or to what extent." That exposure deepens when human behavior enters the equation. "In any cybersecurity framework, the weakest link is the human element." Without technical controls to offset that reality, organizations leave themselves acutely vulnerable.

Looking ahead, the potential for both reward and risk grows as the industry moves from simple generative AI to deeply integrated, agentic workflows. Navigating the change successfully will likely demand a methodical AI strategy, because the productivity gains are matched by the potential for autonomous threats and by the illusion of correctness in AI outputs. This is where a mature framework becomes most important.

When the AI hacks back: As AI systems move beyond text generation and into autonomous execution, the risk profile changes drastically. "We are seeing technologies capable of running a virtual machine where you don’t know what is happening in the background. It’s now understood that a tool like Anthropic’s Claude is capable of performing systematic hacks with minimal human intervention," says Mendiratta. "With these advanced, autonomous features, one has to be really mindful of what is being deployed and to what extent."

Months to minutes: Leaders are taking these risks because the value proposition is enormous. "A team of ten engineers might take a month to create a project plan for a solar power plant. With AI, one person can produce the broad outline in one or two days." The same gains apply to corporate functions: "Manually reviewing a compliance repository with 3,900 compliances would take at least three months, but with Perplexity, one person could review the entire master in seven to eight working days," he continues.

Mendiratta describes the coming years as an era of amplification, where AI could magnify both our best innovations and our worst vulnerabilities. "The good things will become better, and the bad things may become worse." In risk management, he concludes, the greatest danger comes from the unexpected. "The moment you see a surprise with any technology, and it's a negative surprise, you are facing a risk you may not have anticipated."