AI governance has moved out of binders and boardrooms and into production. As generative and agentic AI take on real decision-making, governance now has to function as a live operating system inside everyday workflows. Outcomes, not policies, are the test. In a world where model behavior is probabilistic and constantly shifting, organizations that rely on static rules will fall behind those that pair clear guardrails with continuous, real-time oversight.

Few leaders have seen this shift play out as directly as Jon Knisley. A veteran of large-scale AI and automation initiatives, he brings more than two decades of experience across global enterprises and government organizations. He currently serves as Global Process AI Lead at the intelligent automation company ABBYY and previously served as an AI Engineer and Chief Architect for Business Process Transformation at the Department of Defense’s Joint AI Center. That hands-on experience places him at the intersection of AI strategy, governance, and real-world execution.

"AI governance can’t live in the boardroom anymore. If your governance team hasn’t looked at the actual production dashboards, you’ve got a disconnect that’s going to get you in trouble," says Knisley. Organizations that get it right move governance out of isolation and into a shared, cross-functional operating model, with executive accountability, technical insight into model behavior, real-time operational visibility, and legal and workforce considerations all represented.

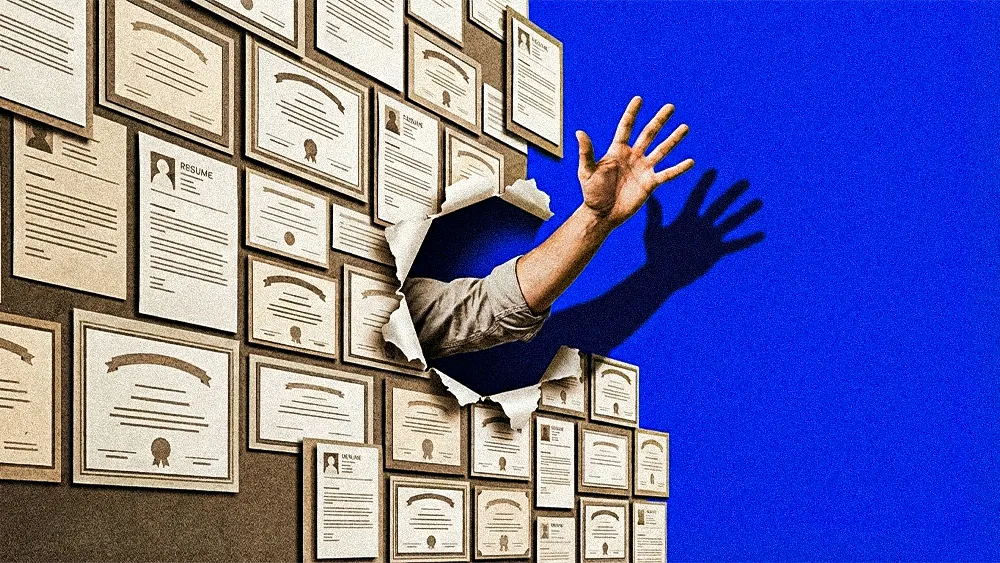

While most organizations have ethical AI guidelines on paper, far fewer have turned those principles into day-to-day practice. The gap between intent and execution is where governance frameworks most often fail. These failures usually appear in predictable ways, rooted in how teams deploy and maintain AI in real business workflows.

Shiny object syndrome: Knisley warns that teams often overreach with generative AI, applying it to problems that would be better solved with simpler, more purpose-built approaches. "A common failure point is shiny object syndrome, where teams apply generative AI to every problem," he explains.

Set, but don't forget: Another frequent breakdown comes from treating AI as a one-time deployment rather than a system that requires ongoing oversight. Model performance shifts as data and user behavior change, which makes continuous monitoring essential. "Frameworks often break because of the false assumption that an AI, once deployed, is done," Knisley says. The rapid pace of AI development is exposing the limits of traditional annual compliance reviews and prompting organizations to adopt real-time KPIs that track performance, effectiveness, and trust.

Quiet compounding: Silent failures can erode AI performance long before they become visible. "Your model isn't throwing errors and customers aren't complaining, but under the hood, performance is degrading and risk is compounding with every prediction. Eventually, that degradation will surface as a major inaccuracy," Knisley warns. A risk-based approach helps match the intensity of oversight to the stakes of each system, with frameworks such as the EU AI Act and the NIST AI Risk Management Framework guiding where governance resources go.
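To make "quiet compounding" concrete, the sketch below shows one simple way a team might catch degradation that never raises an error: compare rolling accuracy on recently labeled predictions against a baseline and alert when it slips past a tolerance. It is a minimal, hypothetical illustration, not ABBYY's or Knisley's method. The class name `DegradationMonitor`, the baseline, tolerance, and window size are all illustrative assumptions, and it assumes ground-truth labels eventually arrive for each prediction.

```python
from collections import deque
from typing import Optional


class DegradationMonitor:
    """Hypothetical sketch: flags silent degradation by comparing rolling
    accuracy on recently labeled predictions against a fixed baseline."""

    def __init__(self, baseline_accuracy: float, tolerance: float = 0.05, window: int = 500):
        # Assumed values: the baseline would typically come from validation,
        # and the tolerance/window would be tuned to the system's risk level.
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        # Keep only the most recent `window` outcomes (1 = correct, 0 = wrong).
        self.outcomes = deque(maxlen=window)

    def record(self, prediction, actual) -> None:
        # Called once the true label for a past prediction becomes known.
        self.outcomes.append(1 if prediction == actual else 0)

    def rolling_accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def is_degraded(self) -> bool:
        # Wait for a full window before alerting to avoid noise from small samples.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance


# Usage: a model validated at 92% accuracy is now right only 80 of 100 times.
monitor = DegradationMonitor(baseline_accuracy=0.92, tolerance=0.05, window=100)
for i in range(100):
    monitor.record(prediction=1, actual=1 if i < 80 else 0)
print(monitor.rolling_accuracy())  # 0.8
print(monitor.is_degraded())       # True
```

Nothing in this loop ever throws an error, which is the point: the only signal is the gap between rolling and baseline accuracy. A higher-risk system might warrant a smaller window or tighter tolerance, in keeping with the risk-based approach above.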

Modern observability platforms provide many of these metrics automatically, and organizations that don't use them risk missing important operational insights. Effective governance is not only about managing risk; it is also essential for enabling innovation, since governance gaps are often the main reason pilots fail to move into full production. Organizations that get real value from AI combine the right infrastructure and observability with a third, more human element.

The people problem: Many AI projects stall not because of the technology but because organizations have not addressed the people, process, and governance components. As Knisley explains, "We love to buy technology to solve a problem, but the real obstacle is almost always the process and the people." Successful organizations also foster a culture where employees are required to manage AI risk and empowered to raise concerns.

Investing in AI governance now allows organizations to move quickly and avoid the scramble of retrofitting compliance later. Those who build trusted AI proactively will gain a lasting advantage, while those who react only after failures or new regulations risk falling behind. "Trust is the new competitive advantage," Knisley concludes. "Companies that can demonstrate their responsible AI practices with real, observable evidence will be the ones that command a premium position in the market."