AI is streamlining enterprise workflows, but it's also blurring the lines of responsibility. As automated systems take on more decision-making, it can become unclear who is responsible when things go wrong, especially when organizations lack an evidence-based, reconstructible audit trail of AI actions. That gap turns governance into a systems problem: if AI actions can’t be traced, reconstructed, and owned across platforms, AI decisions exist in production but disappear under scrutiny.

For Russell Parrott, an independent AI Governance Analyst, that gap is a real risk. His approach moves beyond policy checklists to focus on testable frameworks that expose the distance between corporate claims and actual governance practices. In his books, The Standardized Definition of AI Governance and AI Governance: A Testable Framework, Parrott emphasizes that true AI governance isn’t measured by policies alone, but by a system’s demonstrable, evidence-based reliability.

"The moment we’re no longer in control and AI is making decisions while we only check them, the system is backwards. Humans need to be able to intervene, interrupt, and take over the process seamlessly at any point," says Parrott. The solution, he argues, is a simple demand for proof. Without a strong, evidence-based audit log and clear practices for AI data security, the gap remains. For Parrott, it highlights a fundamental philosophical divide between different approaches to governance.

Proof or performative compliance: Humans must be able to intervene and take control at any point to ensure accountability. "Until we have the practicalities where evidence is maintained by an organization, where we know what model you were using, what version, what time, everything's timestamped, and we can reconstruct it, then the human loop becomes viable," explains Parrott.
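To make the idea concrete, the kind of record Parrott describes (model, version, timestamp, and an owner, kept so that a decision can be reconstructed later) might look something like the minimal Python sketch below. This is an illustration only, not a method from Parrott or his books: the `AuditRecord` and `AuditLog` names, the field names, and the hash chaining used to make tampering detectable are all assumptions introduced here.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


def _digest(body: dict) -> str:
    """SHA-256 over a canonical (sorted-key) JSON rendering of the record body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class AuditRecord:
    # Hypothetical fields: what ran, which version, when, and who owns the outcome.
    decision_id: str
    model_name: str
    model_version: str
    timestamp_utc: str
    responsible_owner: str
    inputs: dict
    output: str
    prev_hash: str    # hash of the previous record, chaining the log together
    record_hash: str  # hash of this record's own contents


class AuditLog:
    """In-memory, append-only log (a real system would need durable storage)."""

    def __init__(self):
        self._records = []

    def record(self, decision_id, model_name, model_version,
               responsible_owner, inputs, output, timestamp_utc=None):
        # Stamp the record with the current UTC time unless one is supplied.
        timestamp_utc = timestamp_utc or datetime.now(timezone.utc).isoformat()
        prev_hash = self._records[-1].record_hash if self._records else ""
        body = {
            "decision_id": decision_id,
            "model_name": model_name,
            "model_version": model_version,
            "timestamp_utc": timestamp_utc,
            "responsible_owner": responsible_owner,
            "inputs": inputs,
            "output": output,
            "prev_hash": prev_hash,
        }
        rec = AuditRecord(**body, record_hash=_digest(body))
        self._records.append(rec)
        return rec

    def reconstruct(self, decision_id):
        """Return the stored record for a decision, or None if none exists."""
        for rec in self._records:
            if rec.decision_id == decision_id:
                return rec
        return None

    def verify(self) -> bool:
        """Recompute every hash; False means a record was altered or reordered."""
        prev = ""
        for rec in self._records:
            body = asdict(rec)
            stored = body.pop("record_hash")
            if body["prev_hash"] != prev or _digest(body) != stored:
                return False
            prev = stored
        return True


# Example usage with made-up values.
log = AuditLog()
log.record("loan-0001", "credit-scorer", "2.3.1", "j.doe",
           {"income": 52000, "requested": 10000}, "approve")
found = log.reconstruct("loan-0001")
```

In this sketch, `reconstruct` answers the question of which model, version, and owner stood behind a decision, while `verify` offers a basic check that the log has not been changed after the fact. A production audit trail would need far more than this, but the shape of the evidence is the same.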

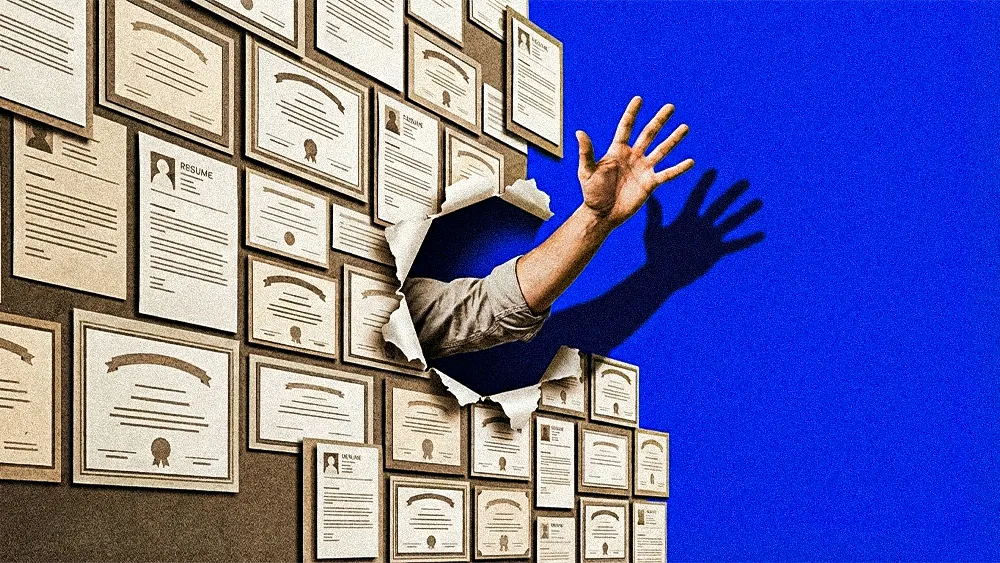

That kind of evidence is increasingly critical as regulators target companies that misrepresent their AI practices and probe gaps in transparency. The challenge is compounded by a corporate playbook that shifts blame onto machines or public figures, sidestepping systemic accountability. At the same time, references to artificial intelligence in S&P 500 companies’ filings surged roughly 700% between 2019 and 2024, heightening the risk of SEC scrutiny and potential litigation.

Lawsuits loom: As AI systems are pulled into higher-stakes decisions, the inability to produce verifiable evidence is becoming a legal fault line rather than a governance nuance. When organizations cannot reconstruct how an outcome was reached, responsibility defaults to the courts. "Once something goes wrong and you can’t explain how the decision happened, litigation becomes the mechanism for finding the truth," Parrott says. "At that point, policies don’t matter. Evidence does."

Pressure tested: That exposure is compounded by how companies often respond under pressure. Instead of demonstrating control, many rely on abstraction, pointing to tools, vendors, or automated processes in ways that dilute accountability. "You can’t step away from a system and say 'the AI did it.' If there’s no record of what model was used, when it ran, and who was responsible for it, someone else will decide responsibility for you." Parrott sees scrutiny as inevitable. "This is going to get very litigious. And it’s going to get worse for organizations that can’t prove what actually happened," he says.

Ultimately, Parrott frames the challenge as cultural rather than technological. He sees the root of the problem in corporate practices that favor shifting responsibility and minimizing accountability. Addressing this requires more than improved systems or tools. It demands a cultural shift toward personal ownership, where individuals within organizations are empowered and expected to take responsibility for the decisions and outcomes of AI-driven processes. "Nobody wants to take responsibility," he concludes. "And that is a really big problem."