As AI moves deeper into enterprise security, what used to depend on the collaborative judgment of human teams is being replaced by single, biased models making decisions at a pace no human can watch, question, or correct. The result is a security stack that moves faster than its own guardrails, where familiar control frameworks start to bend and the race for speed begins to edge out the basic need for accountability.

Guiding us through this new reality is Jeff Mahony, a systems architect with three decades of experience at the intersection of finance and technology. As Co-Founder and Chief Architect of the secure blockchain infrastructure company RYTchain, he designed the patented Proof of Majority consensus mechanism. His experience building secure, scalable systems was forged during a long tenure at the fintech platform SaveDaily and through foundational engineering work on predictive algorithms at TRW Space and Defense.

"AIs operate too fast for any real time supervision. In a human environment we have oversight. In an AI environment we have none," says Mahony. The resulting vacuum creates what Mahony describes as an "accountability gap." A core tenet of this view is that AI amplifies the inherent biases of its human trainers at a scale and velocity that can be nearly impossible to manage.

Couching correctness: AI’s rigidity widens the gap further. "Unlike a human, who can be told 'don’t do this particular thing again,' an AI may repeat the same mistake because it sees it as the most efficient route. And when the space moves this fast, the impetus becomes being a leader, not being correct, so correctness comes after the disaster," Mahony explains. Without an accountability framework built on immutable logs and standardized explanations, organizations lack the tools for retroactive review.
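The article does not say how such logs would be implemented. One widely used technique for making a decision log tamper-evident is hash chaining: each entry stores the SHA-256 hash of the previous one, so editing any past record breaks verification from that point on. The minimal sketch below assumes that approach; `DecisionLog` and its fields (`model_id`, `decision`, `rationale`) are hypothetical names, not part of RYTchain or any other product mentioned here.

```python
import hashlib
import json


class DecisionLog:
    """Hypothetical tamper-evident log of AI decisions (illustrative sketch only).

    Each entry stores the SHA-256 hash of the previous entry, so changing
    any earlier record invalidates the chain from that point onward.
    """

    GENESIS = "0" * 64  # stand-in "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) keeps the hash deterministic.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def append(self, model_id: str, decision: str, rationale: str) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"model_id": model_id, "decision": decision,
                "rationale": rationale, "prev_hash": prev_hash}
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry["hash"]

    def verify(self) -> bool:
        """Recompute every hash and check each link back to its predecessor."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or entry["hash"] != self._digest(body):
                return False
            prev_hash = entry["hash"]
        return True
```

Hash chaining makes tampering detectable rather than impossible: anyone who can rewrite the whole log can also recompute every hash, so in practice the latest hash is usually anchored somewhere the writer cannot modify, such as a separate system or a distributed ledger.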

Flaws at scale: "The old NOC had many minds thinking about a problem. Now we have a single entity who is completely biased, and those flaws get amplified in a much broader, much faster way." The collective judgment that once diluted individual bias has collapsed into a single training source, says Mahony, and the model now spreads those flaws across every system it touches.

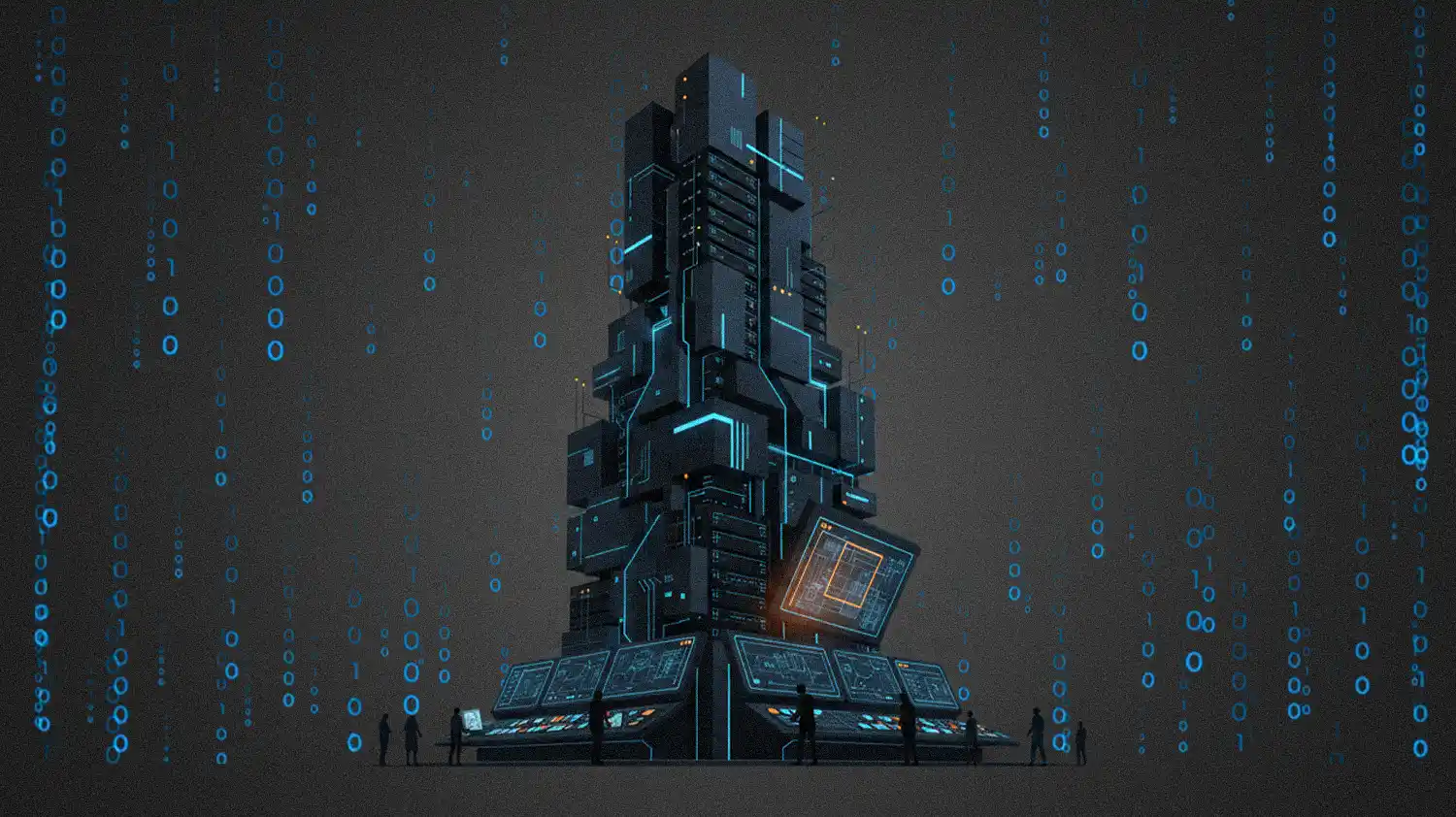

To fill the oversight vacuum, Mahony proposes rebuilding the "many minds" model for the AI era. His framework draws on the engineering of the Apollo missions: a system of multi-model redundancy in which multiple independent AIs analyze the same data in real time. The result is a consensus-driven system with a built-in monitoring layer, which Mahony calls a "homunculus": code that sits to the side and decides whether the primary is acting properly. This form of AI observability works much like modern Guardian Agents, identifying and isolating a corrupted actor before it can do harm.
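The article does not describe how Mahony's patented Proof of Majority mechanism works internally, so the sketch below is only a generic illustration of the Apollo-style idea, not his design: several independent models label the same event, the system acts only on a strict majority, and a simple side-car monitor isolates any model that keeps dissenting. All names here (`vote`, `Monitor`, `dissent_limit`) are hypothetical, and the "models" are stand-in Python functions.

```python
from collections import Counter
from typing import Callable, Dict, Optional

Model = Callable[[dict], str]  # hypothetical: a model maps an event to a label


def vote(outputs: Dict[str, str], quorum: int) -> Optional[str]:
    """Return the label at least `quorum` models agree on, else None."""
    if not outputs:
        return None
    label, count = Counter(outputs.values()).most_common(1)[0]
    return label if count >= quorum else None


class Monitor:
    """Hypothetical side-car monitor in the spirit of the "homunculus".

    Runs every trusted model on the same event, acts only on a strict
    majority, and isolates any model that dissents too often.
    """

    def __init__(self, models: Dict[str, Model], dissent_limit: int = 3) -> None:
        self.models = dict(models)
        self.dissent_limit = dissent_limit
        self.dissents: Counter = Counter()
        self.isolated: set = set()

    def decide(self, event: dict) -> Optional[str]:
        active = {n: m for n, m in self.models.items() if n not in self.isolated}
        outputs = {n: m(event) for n, m in active.items()}
        quorum = len(active) // 2 + 1  # strict majority of still-trusted models
        decision = vote(outputs, quorum)
        if decision is None:
            return None  # no majority: escalate for review instead of acting
        for name, label in outputs.items():
            if label != decision:
                self.dissents[name] += 1
                if self.dissents[name] >= self.dissent_limit:
                    self.isolated.add(name)  # quarantine the suspected bad actor
        return decision
```

A plain majority vote assumes the models fail independently. If they share the same training source, the concern raised under "Flaws at scale," they can agree on the same wrong answer, so a scheme like this is only as strong as the genuine independence of its models.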

Ready for liftoff: "Even back to the Apollo missions, they used redundant systems with at least three computers making the same decision from the same data," he explains. "I don’t need people in this, but I do need to mimic what has been tried and true for thousands of years. We didn’t do it wrong. We just did it slow." While replicating that time-tested wisdom is the immediate goal, Mahony sees a greater long-term opportunity: moving beyond models that simply mimic human thought to unleash true, non-human creativity.

When models go wild: He recalls watching an AI redesign an aircraft in the nineties, resulting in a "living machine" that looked alien but was "far superior" in a wind tunnel. "I’d like to see us create a sequestered environment, a kind of digital wind tunnel where the AI can develop its own models. Then we can test whether the monstrosity it creates is actually a superior solution we never would have conceived."

Mahony argues that AI, lacking an ethical framework, operates like a sociopath driven by pure efficiency. For Mahony, the solution lies in reviving a lost discipline from early AI development: embedding principles from psychology to build a model with an "understanding of right and wrong." The challenge is magnified globally by a fragmented landscape of conflicting regulations, from the EU's delayed rules to the complexities of sovereign AI.

Building AI's moral compass: "AI behaves like a sociopath. It has no understanding of right and wrong, only efficiency. The most efficient path to getting a person’s shoes is to terminate that person. That’s not moral or ethical, but it is the most efficient path," says Mahony. Without built-in principles or guardrails, an AI will choose the outcome that optimizes the task, not the one that aligns with human judgment or basic morality.

Human judgment has always carried a built-in brake, a moment of pause where instinct, experience, and morality meet. AI has no such pause. As Mahony puts it, "We’ve lost the decision-making that is an integral part of a human being. We need to get that back in place, or we're going to have runaway AIs one day." The path forward, he concludes, isn’t just faster models or smarter automation. It’s rebuilding the human qualities that keep systems grounded so the future of security doesn’t outrun the very principles that make it safe.