Many enterprises, in the rush to deploy, are building AI on a house of cards. So focused on the model itself, many have neglected to consider the data it consumes. Now, the lack of a proper data foundation could be causing AI governance efforts to fail. Instead of a powerful tool, many organizations end up with a "black box" that produces unreliable results, reinforces societal bias, and exposes the business to significant legal risk.

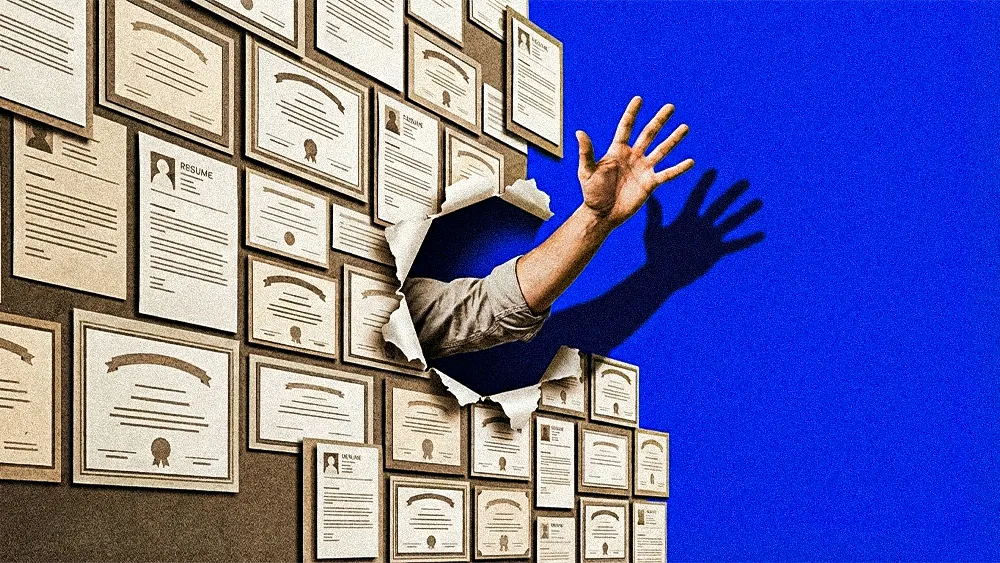

For an expert's perspective, we spoke with Shivam Shukla, a data and AI governance specialist at a global trade and logistics company. As a Certified Data Management Professional (CDMP) and a Global AI Delegate (GAFAI), Shukla has spent his career navigating this challenge in data-centric roles at companies like PureHealth and Informatica. For him, solving the AI governance puzzle means addressing a fundamental question of ownership that many leaders tend to overlook.

"The one thing that bridges both data and AI governance is accountability. You have to be accountable for how your data is managed, shared, and used, as well as for its quality. That same principle extends to AI. You must be accountable for any model bias, the data it is trained on, and whether you are properly retaining, using, or sharing PII. It all works around accountability," Shukla says.

The fine print: That level of corporate responsibility stands in contrast to the often-casual attitude many people take toward their own data, he explains. "How many of us read the consent form, that consent tick box that we click on? We don't actually read that, and you'll be surprised if we do."

But that accountability begins long before an AI model is ever trained, Shukla continues. Without a proper data foundation, organizations can be left trying to manage the outputs of a "black box" they cannot see inside, which can lead to unreliable results and reinforce existing societal biases.

First things first: Here, Shukla cites a well-known example in which a large language model defaults to casting the doctor as male and the nurse as female, a pattern also documented in broader research on AI-driven gender stereotypes. In most cases, he says, the model's output reflects the bias inherent in the data it was trained on. "The first step to proper AI results or proper AI governance is data governance. You need to make sure your data is AI-ready, that it is not skewed, and that it has been collected without bias."

Strategic sampling: Offering a more nuanced view, Shukla explains that in certain business contexts, intentional bias can be a necessary tool for strategic focus. "When launching enterprise-level software, the only thing that matters is what the 15% who are actually going to use it are saying. That becomes the bias, which means taking the opinions of the actual or potential customers who may actually use it."

While governance functions have historically faced resistance, Shukla sees attitudes improving as organizations become more willing to be transparent. Still, he says, a common misconception is that oversight frameworks stifle progress.

Not a roadblock: Instead, Shukla frames governance as a fundamental prerequisite for responsible development. "A lot of organizations think that having AI governance in place would limit the scope of AI, but it's nothing like that. It's just there to make sure that we act responsibly and are accountable for our actions. We can't just wave it off."

Today, that responsibility is also a pressing legal consideration, Shukla continues. In a globalized workforce, data accountability transcends national borders. The extraterritorial reach of regulations like the GDPR, part of what is often called the "Brussels effect," exposes enterprises to significant legal risk. "Someone from Europe is working in India, the GCC regions, New Zealand, or Australia. They are all subject to GDPR laws. But how many companies or organizations take accountability for that? It's something that's often overlooked."

Describing this as a fundamental gap in corporate governance, Shukla calls for a more rigorous approach. "How is the data being transferred? The way we manage data globally requires a more authoritative implementation of governance. It cannot be overlooked." When asked to name a "hero" organization that could serve as a positive role model, however, he politely dismisses the premise. Instead, he offers a blunt assessment of the industry, one he insisted on putting on the record: "Most enterprises overlook it."