The promise of an AI-powered industrial revolution is hitting a physical wall. The sensors, servers, and controllers in our power grids and factories were never designed for the computational demands of AI. Across high-stakes sectors, expensive AI projects are collapsing under the proverbial weight of the foundational hardware they run on. Despite the prevailing "software-first" mentality, the most significant barrier to AI adoption could be physical rather than digital.

That’s the reality for Hamza Kamaleddine, a Lead Engineer/Architect at Honeywell. With over 21 years of experience, Kamaleddine's work in the smart energy and thermal solutions sector gives him a unique perspective on the utilities industry today. In his current role, he oversees projects at a massive scale, including one in the Netherlands that manages millions of devices. When it comes to deploying AI into these environments, Kamaleddine says hardware is the linchpin.

"The hardware part is the main concern for AI. If the infrastructure and resources aren't studied correctly from that perspective, pilot models may collapse," Kamaleddine says. Running fast-moving information technology (IT), such as AI models, on slow-moving, legacy operational technology (OT) risks destabilizing the whole system, he explains. This bottleneck highlights a fundamental conflict between the world of industrial operations and the world of modern computing.

The weight of computation: Modern AI systems are already pushing hardware beyond its original design limits. "AI systems, especially modern deep learning models, rely heavily on specialized hardware because of how computationally intensive they are. Training large models requires enormous amounts of matrix math, while inference needs fast, efficient hardware for real-time responses," Kamaleddine explains. "In simple terms, better hardware means faster training, lower cost, and more capable models."

But hardware alone can’t carry the full weight of progress. Kamaleddine emphasizes that real performance gains also depend on algorithmic innovation, data quality, and the software stack, such as PyTorch and CUDA. The leap from RNNs to Transformers, for instance, didn’t require new chips. By replacing step-by-step recurrence with attention that parallelizes well on existing GPUs, it redefined what those chips could do.

Copilot can't fly solo: Kamaleddine offers a software analogy for the hardware trap, noting that even the most advanced tools struggle to patch a crumbling foundation. "GitHub Copilot is very helpful for new requirements. But if a system has been around for a long time and the code is written in an old way, making changes requires a lot of focus and is very time-consuming, even with the use of GitHub Copilot."

The human hardware: The problem runs deeper than silicon and servers, Kamaleddine says. The greatest bottleneck may actually be the "human hardware": the entrenched mindsets and organizational structures that resist the change AI demands. "AI is still a new mindset. All the teams have to change their mindset about the empowerment that AI can provide them. With AI, the entire organizational structure will have to change, and this will undoubtedly affect job descriptions and the roles each person plays."

But if legacy hardware is the wall and human mindset is the hurdle, how do high-stakes industries move forward? For Kamaleddine’s team, the answer is a cloud-first strategy and relentless, large-scale testing.

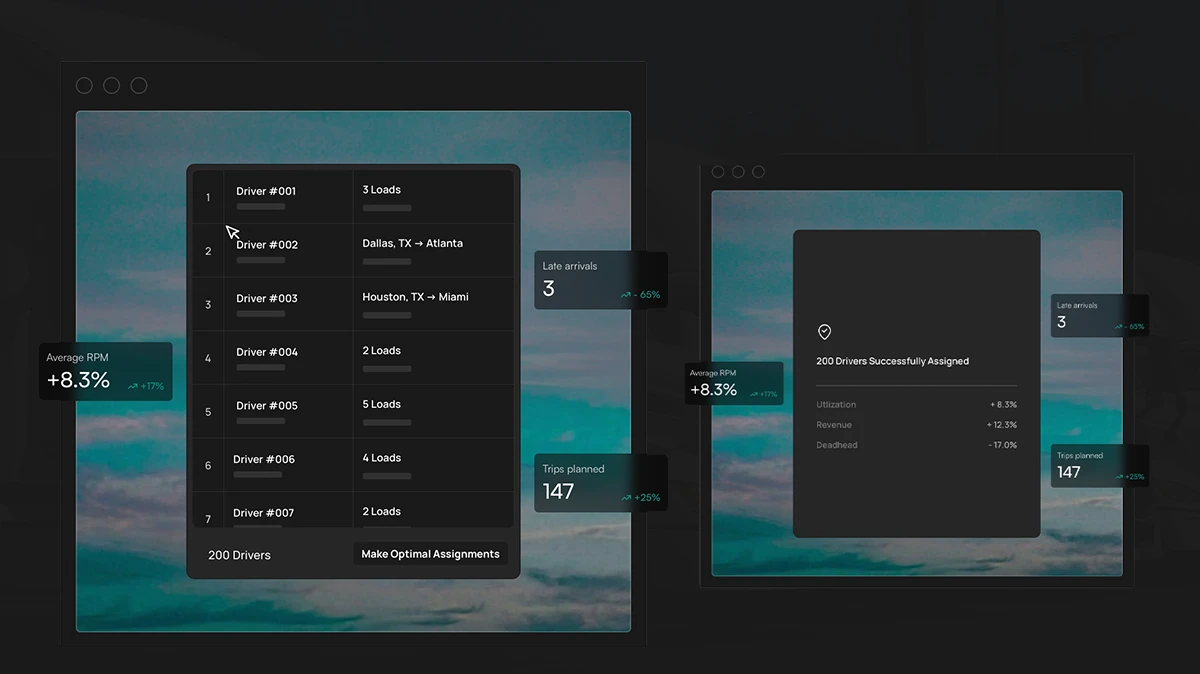

Rigorous, continuous testing: Before going live, the team builds a pilot environment that mirrors the client's operational scale, running up to a million simulated meters concurrently to test and monitor performance. "We use several pilot environments and we run lots of internally developed tools to test the performance on 1,000 devices, 10,000, 1,000,000, and so on," Kamaleddine explains. These tests run in every development cycle to verify resilience before deployment.
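The article doesn't describe Honeywell's internal tools, so the following is only a hypothetical sketch of the general technique: a stepped-scale load test that simulates a growing fleet of meters and records success counts and latency at each step. Everything here is an assumption for illustration. `fake_ingest` is a stand-in for whatever system is under test, and the names, timings, 0.1% failure rate, and concurrency cap are invented. A real million-meter run would typically be spread across many machines rather than a single Python process.

```python
import asyncio
import random
import time
from dataclasses import dataclass


@dataclass
class StepResult:
    meters: int
    ok: int
    failed: int
    p50_ms: float
    p99_ms: float
    elapsed_s: float


async def fake_ingest(reading: dict, rng: random.Random) -> None:
    """Hypothetical stand-in for the system under test (e.g. an ingest API).
    Sleeps briefly to simulate I/O and fails roughly 0.1% of calls."""
    await asyncio.sleep(rng.uniform(0.0005, 0.002))
    if rng.random() < 0.001:
        raise ConnectionError("simulated dropped reading")


async def simulated_meter(meter_id: int, rng: random.Random,
                          sem: asyncio.Semaphore, latencies: list) -> bool:
    """One simulated meter sends a single reading; returns True on success."""
    reading = {"meter_id": meter_id, "kwh": round(rng.uniform(0.0, 5.0), 3)}
    async with sem:  # cap how many requests are in flight at once
        start = time.perf_counter()
        try:
            await fake_ingest(reading, rng)
        except ConnectionError:
            return False
        latencies.append((time.perf_counter() - start) * 1000.0)
        return True


def percentile(sorted_vals: list, q: float) -> float:
    """Simple nearest-rank style percentile on an already sorted list."""
    if not sorted_vals:
        return 0.0
    idx = min(len(sorted_vals) - 1, int(q * len(sorted_vals)))
    return sorted_vals[idx]


async def run_step(meters: int, max_in_flight: int, seed: int) -> StepResult:
    """Run one scale step with `meters` simulated devices."""
    rng = random.Random(seed)
    sem = asyncio.Semaphore(max_in_flight)
    latencies: list = []
    t0 = time.perf_counter()
    results = await asyncio.gather(
        *(simulated_meter(i, rng, sem, latencies) for i in range(meters))
    )
    elapsed = time.perf_counter() - t0
    ok = sum(results)
    lat = sorted(latencies)
    return StepResult(meters, ok, meters - ok,
                      percentile(lat, 0.50), percentile(lat, 0.99), elapsed)


async def stepped_load_test(scales: list, max_in_flight: int = 500) -> list:
    """Run each scale step in order, e.g. 1,000 then 10,000 meters."""
    return [await run_step(n, max_in_flight, seed=n) for n in scales]


if __name__ == "__main__":
    for r in asyncio.run(stepped_load_test([1_000, 10_000])):
        print(f"{r.meters:>7,} meters  ok={r.ok:,} failed={r.failed:,}  "
              f"p50={r.p50_ms:.2f}ms p99={r.p99_ms:.2f}ms  {r.elapsed_s:.2f}s")
```

Stepping through increasing fleet sizes, rather than jumping straight to the largest one, makes it easier to see where latency or failure counts start to degrade as load grows.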

For Kamaleddine, the takeaway is a realistic counter-narrative to the hype: success in this new era may depend less on software talent and more on a willingness to make the long-term investments to upgrade physical infrastructure. Ultimately, the same technology that creates new risks also provides our most powerful tools to combat them, he concludes. "With the use of AI, we'll have much more power to test our tools and test our development cycles in a much more secure way. It could expose several security leaks or vulnerabilities that were not considered during the product's design. With the use of AI, it's giving us much more power to do that."